Prior to starting a historical data migration, ensure you do the following:

- Create a project on our US or EU Cloud.

- Sign up to a paid product analytics plan on the billing page (historic imports are free but this unlocks the necessary features).

- Raise an in-app support request with the Data pipelines topic detailing where you are sending events from, how, the total volume, and the speed. For example, "we are migrating 30M events from a self-hosted instance to EU Cloud using the migration scripts at 10k events per minute."

- Wait for the OK from our team before starting the migration process to ensure that it completes successfully and is not rate limited.

- Set the `historical_migration` option to `true` when capturing events in the migration.

Migrating data from Matomo is a two-step process:

- Exporting data via Matomo's API

- Converting Matomo event data to the PostHog schema and capturing in PostHog

1. Exporting data from Matomo

Matomo provides a full API you can get data from. To get the relevant event data, we make a request to the Live.getLastVisitsDetails method.

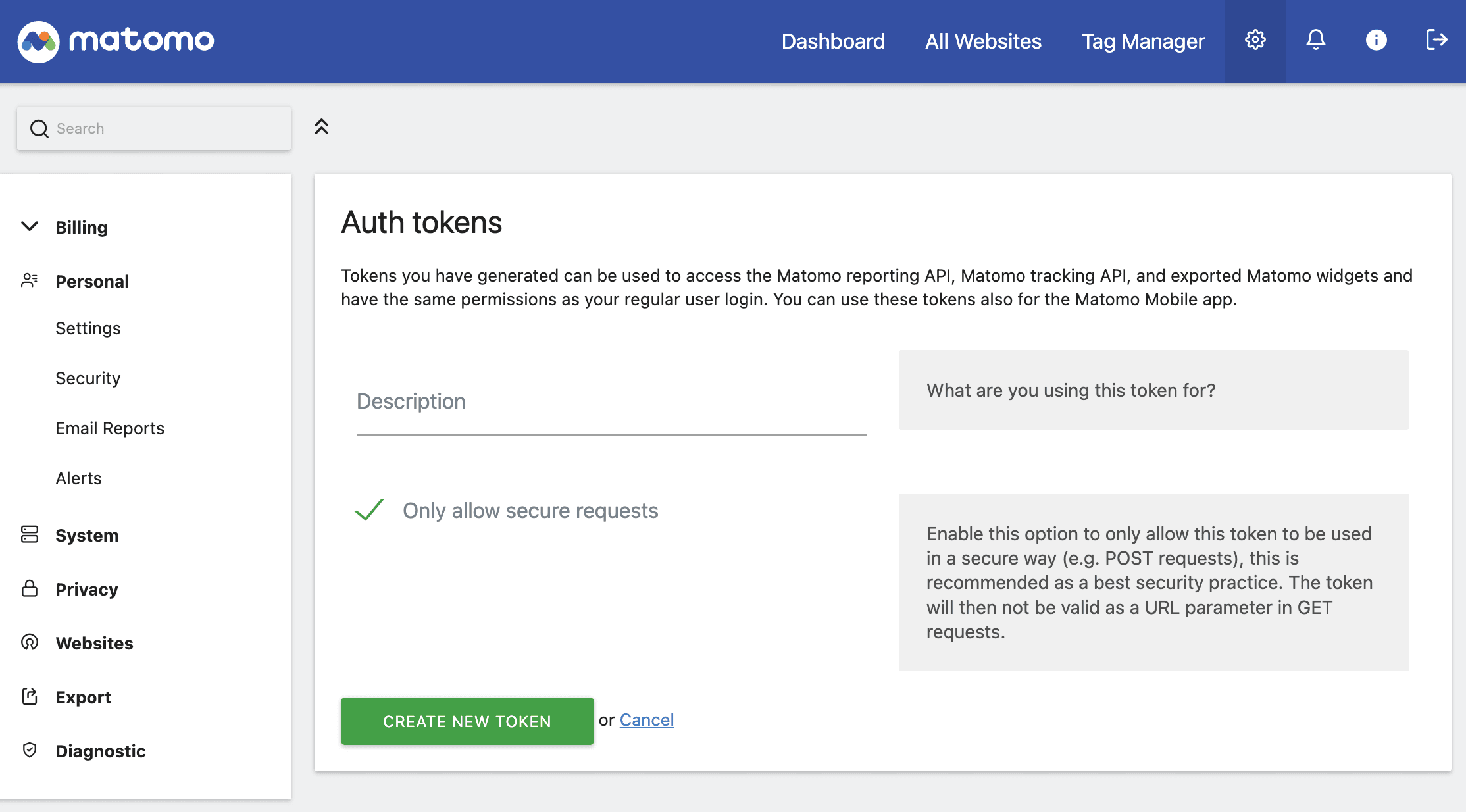

To access the API, you need to create an API token. This can be done in your instance's security settings.

With this token, you can make a request to get your event data and save it as a JSON file. This example gets 10,000 events from the first half of 2024:
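A minimal Python sketch of such a request, using only the standard library. The query parameters (`module`, `method`, `idSite`, `period`, `date`, `format`, `token_auth`, `filter_limit`, `filter_offset`) belong to Matomo's Reporting API. The site ID, date range, and output filename are placeholder assumptions, and `build_export_params` and `export_visits` are hypothetical helper names.

```python
import json
import urllib.parse
import urllib.request


def build_export_params(token, site_id=1, date_range="2024-01-01,2024-06-30",
                        limit=10000, offset=0):
    """Build the form parameters for a Live.getLastVisitsDetails request."""
    return {
        "module": "API",
        "method": "Live.getLastVisitsDetails",
        "idSite": site_id,            # placeholder: your Matomo site ID
        "period": "range",
        "date": date_range,           # first half of 2024
        "format": "JSON",
        "token_auth": token,          # the API token from your security settings
        "filter_limit": limit,
        "filter_offset": offset,
    }


def export_visits(base_url, params, out_path="matomo_events.json"):
    """POST the request to Matomo and save the JSON response to a file."""
    body = urllib.parse.urlencode(params).encode("utf-8")
    with urllib.request.urlopen(base_url, data=body) as response:
        visits = json.load(response)
    with open(out_path, "w", encoding="utf-8") as f:
        json.dump(visits, f)
    return visits
```

For example, `export_visits("https://your-matomo-domain.com/index.php", build_export_params("your-api-token"))` would write the response to `matomo_events.json`, where the domain and token are placeholders for your own values.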

To return all rows, set `filter_limit` to `-1`. Matomo recommends that you export only 10,000 rows at a time. If you have more rows than that, you can combine `filter_limit` and `filter_offset` to fetch them in sets.
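Paging with `filter_limit` and `filter_offset` could be sketched as follows. `iter_pages` and `fetch_page` are hypothetical names: `fetch_page(limit, offset)` stands in for whatever function you use to call the Matomo API with those two parameters and return a list of rows. The loop assumes that a short or empty page means there is nothing left to export.

```python
def iter_pages(fetch_page, page_size=10000):
    """Yield pages of rows until a page comes back short or empty.

    fetch_page(limit, offset) is a caller-supplied function that returns
    a list of rows for the given filter_limit and filter_offset.
    """
    offset = 0
    while True:
        rows = fetch_page(page_size, offset)
        if not rows:
            break  # nothing left to export
        yield rows
        if len(rows) < page_size:
            break  # a short page is assumed to be the last one
        offset += page_size
```

Each yielded page can be written to its own file or appended to a single list before conversion.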

2. Converting Matomo event data to the PostHog schema

The schema of Matomo's exported event data is similar to PostHog's schema, but it requires conversion to work with the rest of PostHog's data. You can see details on Matomo's schema in their docs, and the events and properties PostHog autocaptures in our docs.

The big difference is that Matomo structures its data around visits that contain one or more actions. Matomo's actions are similar to PostHog's events. You can go through each visit and convert it to PostHog's schema by doing the following:

- Converting properties like `operatingSystemVersion` to `$os_version`.

- Omitting properties that aren't relevant like `visitEcommerceStatusIcon`, `plugins`, `timeSpentPretty`, and many more. Matomo includes many more properties on their events than PostHog does.

- Looping through the `actionDetails`, converting:

  - Properties like `url` to `$current_url`

  - Action types like `action` to event names like `$pageview`

  - Action `timestamp` to an ISO 8601 timestamp

- Adding visit properties to action properties.
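The conversion steps above could be sketched like this. Only the three mappings named in the list (`operatingSystemVersion` to `$os_version`, `url` to `$current_url`, and `action` to `$pageview`) come from this guide. The rest is assumption: that the action type lives in a `type` field, that `timestamp` is Unix epoch seconds, and that `visitorId` is a suitable `distinct_id`. Check these against your own export. `convert_visit` is a hypothetical helper name.

```python
from datetime import datetime, timezone

# Visit-level property renames; extend this with the properties you want to keep.
VISIT_PROPERTY_MAP = {"operatingSystemVersion": "$os_version"}

# Matomo action type -> PostHog event name; unmapped types keep their own name.
EVENT_NAME_MAP = {"action": "$pageview"}


def convert_visit(visit):
    """Convert one Matomo visit into a list of PostHog-style event dicts."""
    # Keep only the mapped visit properties; everything else is omitted.
    visit_props = {
        new: visit[old] for old, new in VISIT_PROPERTY_MAP.items() if old in visit
    }
    events = []
    for action in visit.get("actionDetails", []):
        props = dict(visit_props)  # add visit properties to each action
        if "url" in action:
            props["$current_url"] = action["url"]
        # Assumption: the action timestamp is Unix epoch seconds.
        ts = datetime.fromtimestamp(int(action["timestamp"]), tz=timezone.utc)
        action_type = action.get("type", "action")  # assumed field name
        events.append({
            "event": EVENT_NAME_MAP.get(action_type, action_type),
            "distinct_id": visit.get("visitorId"),  # assumed identifier field
            "timestamp": ts.isoformat(),
            "properties": props,
        })
    return events
```

Copying the visit properties onto every action keeps each event self-describing, at the cost of some duplication across events from the same visit.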

Once this is done, you can capture each action into PostHog using the Python SDK or the capture API endpoint with historical_migration set to true.

Here's an example version of a Python script:
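A minimal sketch of the capture half of such a script, using the capture API's `/batch/` endpoint with `historical_migration` set to `true` in the request body. It assumes each Matomo action has already been converted to a dictionary with `event`, `distinct_id`, `timestamp`, and `properties` keys. The helper names (`build_batch_payload`, `chunked`, `send_batch`) are hypothetical, and the host and project API key are placeholders.

```python
import json
import urllib.request


def build_batch_payload(api_key, events):
    """Wrap converted events in a batch request body flagged as historical."""
    batch = []
    for e in events:
        props = dict(e.get("properties", {}))
        props["distinct_id"] = e["distinct_id"]
        batch.append({
            "event": e["event"],
            "properties": props,
            "timestamp": e["timestamp"],  # ISO 8601 string
        })
    return {"api_key": api_key, "historical_migration": True, "batch": batch}


def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def send_batch(host, payload):
    """POST one batch to the capture API and return the HTTP status code."""
    request = urllib.request.Request(
        host.rstrip("/") + "/batch/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

A run might loop over `chunked(events, 500)` and call `send_batch("https://us.i.posthog.com", build_batch_payload("<ph_project_api_key>", chunk))` for each chunk. The chunk size of 500 is an arbitrary choice for illustration, and EU Cloud projects would use `https://eu.i.posthog.com` instead.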

This script may need modification depending on the structure of your Matomo data, but it gives you a start.